Weird thing I observed in #infosec

There is an incredible amount of disinterest/contempt for #AI amongst many practitioners.

This contempt extends to willful ignorance about the subject.

q.v. "stochastic parrots/bullshit machines" etc.

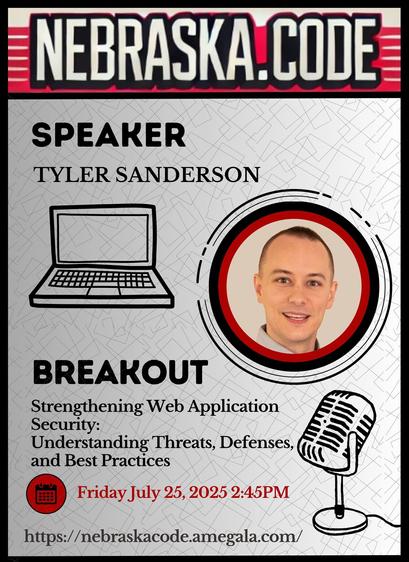

Which, in a field with hundreds of millions of users, strikes me as highly unprofessional. Just the other day I read a blog post by a renown hacker (and likely earned a mute/block) "Why I don't use AI and you should not too".

Connor Leahy, CEO of #conjecture is one of the few credible folks in the field.

But to the question at hand.

The prompts are superbly sanitised.

In part by design, in part due to the fact that you are not connecting to a database but to a multidimensional vector data structure.

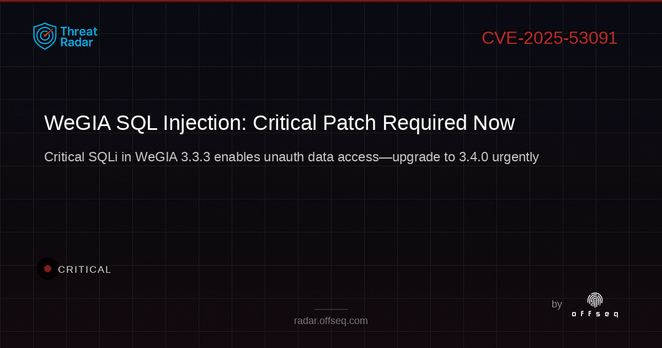

The #prompt is how you get in through the backdoor. Though I haven't looked into fuzzing, but I suspect because of the tech, the old #sqlinjection tek and similar will not work.

Long story short; It is literally impossible to build a secure #AI. By the virtue of the tech.

#promptengineering is the key to open the back door to the knowledge tree.

Then of course there are local models you can train on your own datasets. Including a stack of your old #2600magazine